Melody Generation Using GPT

Advancing Music Composition Through Transformer-Based Models

Introduction

The Melody Generation Using GPT project applies transformer architectures, originally developed for natural language processing, to generate sequences of musical notes. The approach encodes melodies as token sequences, trains GPT models on them, and samples new compositions that aim to mimic human-like creativity.

By adapting Generative Pre-trained Transformers for music, this project demonstrates the versatility of AI in creative domains.

The full implementation, including source code, datasets, and training configurations, is available on GitHub.

The Vision

This project seeks to explore AI's role in music composition by answering the following questions:

- Can transformer-based models generate musically coherent sequences?

- How do architectural variations impact melody quality?

- What quantitative and qualitative metrics best evaluate AI-generated music?

By tackling these questions, the project aims to provide insights into how deep learning can be used as a tool for musicians and composers.

"Transformers redefine creativity by turning data into art."

Technical Workflow

The project is structured into the following stages:

- Data Preparation: Melodies are extracted from MIDI files, converted into token sequences, and augmented to increase diversity. The tokens encode pitches, note durations, and rests.

- Model Training: Custom GPT architectures (Original, Deeper-Thinner, and Wider-Shallower) are trained on the tokenized data. Hyperparameters such as the embedding dimension, the number of attention heads, and the number of layers are tuned to optimize performance.

- Evaluation: Perplexity, BLEU score, and training and validation loss measure how well the models reproduce the patterns and coherence found in music.

- Generation: The trained models synthesize melodies by sampling from the learned probability distributions.
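The data preparation stage can be illustrated with a minimal sketch. The source does not specify the token format or the augmentation method, so the `P<pitch>_D<duration>` scheme, the helper names, and the use of transposition as augmentation are all assumptions made for illustration.

```python
# Hypothetical token scheme: one token per (pitch, duration) event.
# Pitches are MIDI note numbers; None stands for a rest.
REST = "REST"

def note_to_token(pitch, duration):
    """Map a pitch (or None for a rest) and a duration to a string token."""
    name = REST if pitch is None else f"P{pitch}"
    return f"{name}_D{duration}"

def build_vocab(melodies):
    """Assign an integer id to every distinct token in the dataset."""
    tokens = sorted({note_to_token(p, d) for melody in melodies for p, d in melody})
    return {token: index for index, token in enumerate(tokens)}

def encode(melody, vocab):
    """Turn a melody into the list of token ids a model would train on."""
    return [vocab[note_to_token(p, d)] for p, d in melody]

def decode(ids, vocab):
    """Turn token ids back into string tokens."""
    inverse = {index: token for token, index in vocab.items()}
    return [inverse[i] for i in ids]

def transpose(melody, semitones):
    """Assumed augmentation: shift every pitch, leave rests and durations alone."""
    return [(None if p is None else p + semitones, d) for p, d in melody]

# A four-event melody: C4, D4, a rest, E4 (durations in beats).
melody = [(60, 1.0), (62, 0.5), (None, 0.5), (64, 2.0)]
augmented = [melody, transpose(melody, 2)]
vocab = build_vocab(augmented)
ids = encode(melody, vocab)
```

Keeping pitch and duration in a single token keeps sequences short at the cost of a larger vocabulary; separate pitch and duration tokens would make the opposite trade.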

Training was conducted on high-performance GPUs, using PyTorch for the implementation and Weights & Biases for experiment tracking.
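The generation stage samples each next token from the model's predicted distribution. The sketch below shows one common way to do this, with temperature scaling and optional top-k filtering. It is written in plain Python rather than PyTorch so it runs without dependencies. The function names and the `next_logits` callback, which stands in for a trained model, are hypothetical; the source does not state which sampling strategy the project uses.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next(logits, temperature=1.0, top_k=None, rng=random):
    """Draw one token id, optionally restricted to the top_k most likely ids."""
    probs = softmax(logits, temperature)
    candidates = list(range(len(probs)))
    if top_k is not None:
        candidates = sorted(candidates, key=lambda i: probs[i], reverse=True)[:top_k]
    weights = [probs[i] for i in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]

def generate(next_logits, prompt, max_new_tokens, **sampling_args):
    """Autoregressive loop: feed the growing sequence back in at every step."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        tokens.append(sample_next(next_logits(tokens), **sampling_args))
    return tokens

# Stand-in for a trained model (an assumption): favors the token after the last one.
def toy_model(tokens, vocab_size=5):
    favored = (tokens[-1] + 1) % vocab_size
    return [3.0 if i == favored else 0.0 for i in range(vocab_size)]

sample = generate(toy_model, prompt=[0], max_new_tokens=8, temperature=0.8, top_k=3)
```

With `top_k=1` the loop becomes greedy decoding, which tends to produce repetitive melodies; higher temperatures add variety at the risk of less coherent note transitions.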

Model Architectures

The project explores three configurations of GPT models, each tailored for specific trade-offs:

- Original Configuration: Balanced dimensions with two attention layers and moderate embedding size.

- Deeper-Thinner Configuration: Narrow embedding dimensions but with more attention layers to enhance sequence depth.

- Wider-Shallower Configuration: Broader embedding dimensions but fewer layers to emphasize parallel learning of features.

The final models have between roughly 23,000 and 31,000 parameters each, which keeps them small enough for lightweight deployment without compromising quality.
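To see how depth and width trade off at this scale, the sketch below counts the parameters of a standard GPT-style decoder. The source does not list the actual layer counts, embedding sizes, vocabulary size, or context length, so every number here is hypothetical. The formula also assumes a 4x feed-forward expansion, bias terms, learned positional embeddings, and an output head tied to the token embedding, none of which the source confirms.

```python
def count_gpt_params(vocab_size, block_size, n_layer, n_embd, tie_weights=True):
    """Parameter count for a GPT-style decoder under the assumptions above."""
    d = n_embd
    embeddings = vocab_size * d + block_size * d      # token + positional tables
    attention = (d * 3 * d + 3 * d) + (d * d + d)     # QKV projection + output projection
    mlp = (d * 4 * d + 4 * d) + (4 * d * d + d)       # expand to 4d, project back to d
    layer_norms = 2 * (2 * d)                         # two LayerNorms per block
    per_layer = attention + mlp + layer_norms         # equals 12*d*d + 13*d
    final_norm = 2 * d
    head = 0 if tie_weights else vocab_size * d       # tied head reuses the token table
    return embeddings + n_layer * per_layer + final_norm + head

# Hypothetical configurations, chosen only to illustrate the trade-off.
configs = {
    "balanced": dict(n_layer=2, n_embd=32),
    "deeper_thinner": dict(n_layer=4, n_embd=24),
    "wider_shallower": dict(n_layer=1, n_embd=48),
}
counts = {
    name: count_gpt_params(vocab_size=40, block_size=64, **cfg)
    for name, cfg in configs.items()
}
```

Because the per-layer cost grows with the square of the embedding size, halving the width frees enough budget for roughly four times as many layers, which is why configurations with very different shapes can land at similar total sizes.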

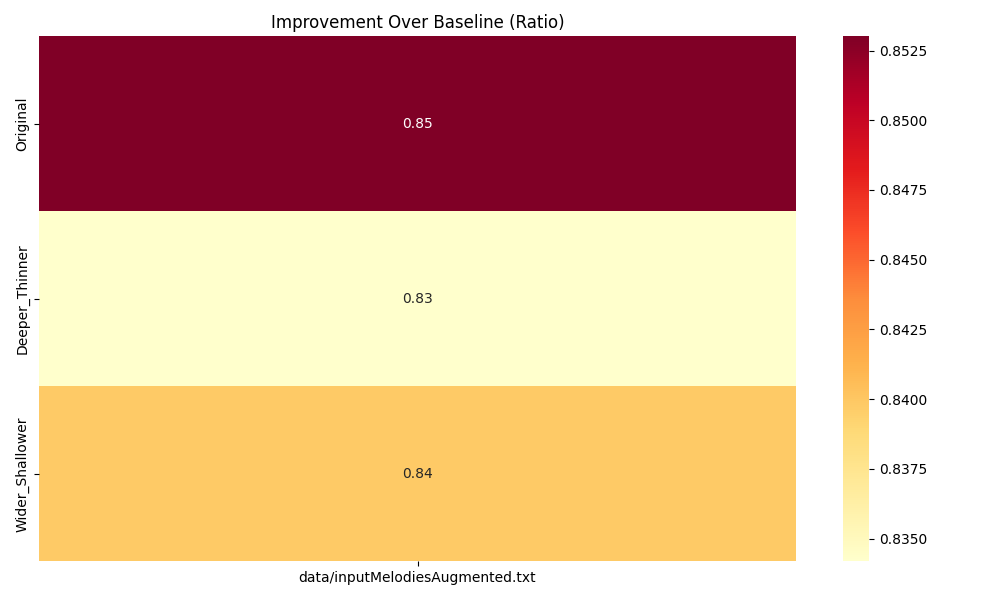

Evaluation and Results

To evaluate the generated melodies, we used:

- Perplexity: Measures the uncertainty in the model's predictions; lower is better. The Original model's lower perplexity indicates that it predicts the next token with less uncertainty, and learns more stably, than the alternative configurations.

- BLEU Score: Quantifies the n-gram overlap between generated sequences and ground-truth melodies. The Original configuration achieved the highest score, 0.64, reflecting its superior ability to replicate musical structures.

- Overfitting Metric: Tracks the divergence between training and validation losses to check how well the model generalizes.
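Perplexity and the overfitting check both derive from the cross-entropy loss. As a minimal sketch, perplexity is the exponential of the mean per-token negative log-likelihood, and a simple overfitting signal is the validation loss minus the training loss. The function names are illustrative, and the source does not say exactly how the project defines its overfitting metric.

```python
import math

def perplexity(token_nlls):
    """exp of the mean negative log-likelihood (in nats) over all tokens."""
    return math.exp(sum(token_nlls) / len(token_nlls))

def generalization_gap(train_loss, val_loss):
    """Assumed overfitting signal: a gap that keeps growing suggests overfitting."""
    return val_loss - train_loss

# A model that spreads probability evenly over 4 tokens has perplexity 4.
uniform_over_four = [math.log(4.0)] * 3
ppl = perplexity(uniform_over_four)
gap = generalization_gap(train_loss=1.0, val_loss=1.25)
```

A perplexity of k can be read as the model being, on average, as uncertain as if it were choosing uniformly among k tokens.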

The generated samples showed coherent note transitions and adherence to learned patterns. However, subjective evaluation revealed slightly limited rhythmic variation in the deeper models.
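For the BLEU score, the sketch below shows a single-reference BLEU computed over note tokens: the geometric mean of clipped n-gram precisions for n = 1 to 4, multiplied by a brevity penalty. It has no smoothing, so any n-gram order with zero matches yields a score of 0. The source does not state which BLEU variant or library produced the 0.64 figure, so this is an illustration of the metric rather than a reproduction of that result.

```python
import math
from collections import Counter

def ngram_counts(tokens, n):
    """Count every contiguous n-gram in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def bleu(candidate, reference, max_n=4):
    """Unsmoothed single-reference BLEU over token lists."""
    if not candidate:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand = ngram_counts(candidate, n)
        ref = ngram_counts(reference, n)
        total = sum(cand.values())
        # Clip each candidate n-gram count by its count in the reference.
        matched = sum(min(count, ref[gram]) for gram, count in cand.items())
        if total == 0 or matched == 0:
            return 0.0
        log_precisions.append(math.log(matched / total))
    # Penalize candidates that are shorter than the reference.
    if len(candidate) >= len(reference):
        brevity_penalty = 1.0
    else:
        brevity_penalty = math.exp(1.0 - len(reference) / len(candidate))
    return brevity_penalty * math.exp(sum(log_precisions) / max_n)

reference = ["C4", "D4", "E4", "F4", "G4"]
generated = ["C4", "D4", "E4", "F4", "A4"]
score = bleu(generated, reference)
```

BLEU rewards exact n-gram matches, so a melody that is a transposed or rhythmically shifted copy of the reference scores poorly even though it may sound similar; this is one reason the score is paired with subjective listening.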

Generated Samples

Explore and compare the melodies generated by each configuration below:

- Original Configuration:

- Deeper-Thinner Configuration:

- Wider-Shallower Configuration:

The comparison of the improvement ratio for different configurations is visualized below:

Significance and Future Scope

This project highlights AI's transformative role in music composition. Future work can expand upon this foundation to include:

- Integration with music theory constraints for stylistic control.

- Fine-tuning on genre-specific datasets to specialize in styles like jazz or classical.

- Real-time melody generation for live performances.

"AI-generated music has the potential to inspire human creativity in ways never imagined."

Developed by Aryan Singh. Explore the full implementation on GitHub. For inquiries, connect on LinkedIn.